Agentic AI is moving beyond simple chat interfaces. These systems can retrieve data, call tools, trigger workflows, and make decisions with limited supervision. That capability opens the door to real efficiency gains. It also creates exposure that feels different from traditional software risk.

Agentic AI is moving beyond simple chat interfaces. These systems can retrieve data, call tools, trigger workflows, and make decisions with limited supervision. That capability opens the door to real efficiency gains. It also creates exposure that feels different from traditional software risk.

When an AI agent can take action instead of just generating text, mistakes compound faster. And if an attacker finds a way to influence that agent, the consequences extend beyond bad outputs. They can affect systems, data, and operations.

Below are seven risks organizations should have on their radar, along with grounded mitigation strategies that actually make sense in practice.

1. Prompt Injection and Poisoning

Datadome can help reduce exposure to prompt injection poisoning, particularly when hostile automated traffic is involved in distributing manipulated content.

Prompt injection works because agents trust the inputs they receive. An attacker hides instructions inside content that appears harmless. When the agent processes it, those instructions become part of its working context. In certain setups, that can lead to data exposure or unintended actions.

Poisoning takes longer but can be just as damaging. If malicious data enters the pipeline repeatedly, it may influence how an agent responds over time.

Mitigation is layered. Validate inputs before they reach the model, restrict what actions the agent is allowed to perform, even if prompted, monitor outputs for abnormal patterns, and limit large-scale scraping or automated manipulation that could feed harmful content into agent workflows. No single control solves this. Defense has to happen at multiple points.

2. Privilege Creep inside Autonomous Systems

Agentic tools are often granted broad permissions because they need to be useful, such as access to internal documents, the ability to query databases, and permission to trigger processes.

Over time, those permissions can expand, a new integration here, a wider API scope there. If something goes wrong, that accumulated access becomes the real risk. An agent that can read and write across systems creates more surface area than one operating in a narrow sandbox.

The mitigation is discipline. Apply least privilege, review access regularly, and remove capabilities that are no longer essential. Logging should not be optional. If an agent performs an action, there should be a trace.

3. Data Leakage through Conversation Engineering

Not every breach looks like a breach. Attackers can guide an agent through a series of normal-looking prompts that gradually extract protected information. Instead of asking for sensitive data outright, they request fragments that can be assembled later.

This is especially relevant when agents connect to internal knowledge repositories.

Mitigation starts at the data layer. Tag sensitive information and enforce response restrictions and train agents to decline certain categories of output regardless of phrasing. Monitor for unusual query sequences rather than single suspicious questions. The pattern often tells the story.

4. External Content Manipulation

Many agentic systems rely on outside information like news feeds, vendor listings, and even public datasets. That reliance introduces risk.

There are public demonstrations showing how AI agents can be influenced by manipulated content. If an agent trusts external inputs without validation, it may act on false or malicious information.

Imagine an automated sourcing assistant selecting a supplier based on falsified credibility signals. Or a financial agent incorporating altered pricing data into decision-making.

The solution is simple in theory but harder in practice. Validate external sources and assign trust tiers. In higher-impact workflows, require corroboration before taking action. Autonomy should not mean blind acceptance.

5. API Misuse at Machine Speed

Agentic systems interact with APIs constantly. If credentials are exposed or the agent is manipulated, that interaction can become risky very quickly, with repeated calls, bulk extraction, and serious service disruption.

Mitigation here looks familiar to seasoned security teams. Strong authentication, narrow token scopes, rate limits, and anomaly detection tied to behavior rather than raw volume. When APIs are the engine behind the agent, they must be hardened accordingly.

6. Third-Party Dependency Risk

Few agentic deployments operate in isolation. They connect to SaaS tools, cloud services, analytics platforms, and external data providers. Each connection expands the trust boundary.

If one of those providers is compromised or begins delivering tainted data, the agent may process and act on it without recognizing the issue.

Organizations should evaluate partner security practices and validate incoming data streams. In sensitive environments, isolating external inputs in controlled execution environments adds resilience. Supply chain awareness matters just as much in AI as it does in traditional software ecosystems.

7. Governance Gaps and Informal Expansion

One of the less technical but equally serious risks is governance drift.

Agentic AI often starts as a pilot, a controlled deployment. Over time, more teams adopt it, more features are layered on, and access expands because it is convenient.

Without clear oversight, that expansion can outpace security review.

Mitigation requires structure. Define ownership and establish review checkpoints before expanding capabilities. Conduct adversarial testing to explore failure scenarios. Governance is not a blocker to innovation, it’s what makes innovation sustainable.

Final Thoughts

Agentic AI changes the equation because it combines decision-making with action. The system is no longer just producing output, it’s interacting with the environment. That interaction multiplies risk when boundaries are weak.

Organizations adopting agentic AI should think in layers. Restrict what agents can access, validate what they consume, monitor what they produce, and review how they evolve.

Autonomy can deliver real value. But without guardrails, it can also create blind spots that are difficult to detect until damage is done. The difference will come down to how seriously oversight is built into the architecture from day one.

Do You Want to Implement Business Best Practices?

You can download in-depth presentations on Agentic AI and 100s of management topics from the FlevyPro Library. FlevyPro is trusted and utilized by 1000s of management consultants and corporate executives.

For even more best practices available on Flevy, have a look at our top 100 lists:

These best practices are of the same as those leveraged by top-tier management consulting firms, like McKinsey, BCG, Bain, and Accenture. Improve the growth and efficiency of your organization by utilizing these best practice frameworks, templates, and tools. Most were developed by seasoned executives and consultants with over 20+ years of experience.

Readers of This Article Are Interested in These Resources

32-slide PowerPoint presentation

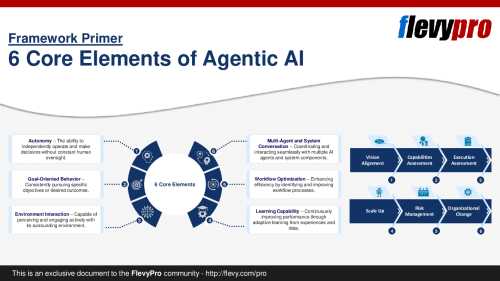

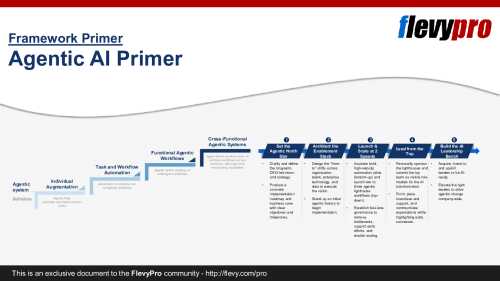

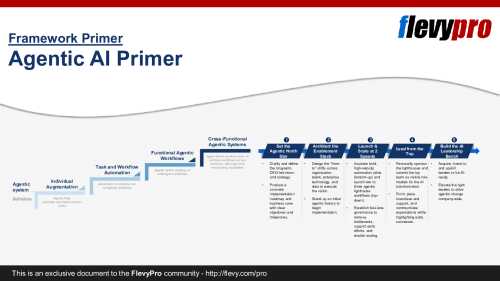

Agentic AI addresses a critical challenge in enterprise adoption: while most organizations achieve early success with individual models, few connect them into cohesive systems that transform business performance.

Traditional automation improves efficiency, yet it rarely redefines how decisions

[read more]

26-slide PowerPoint presentation

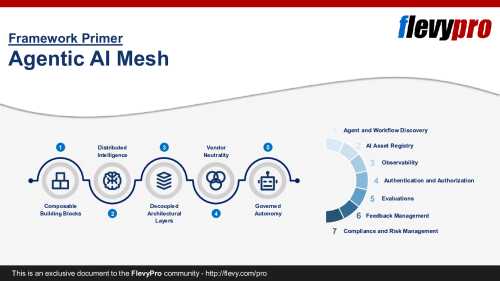

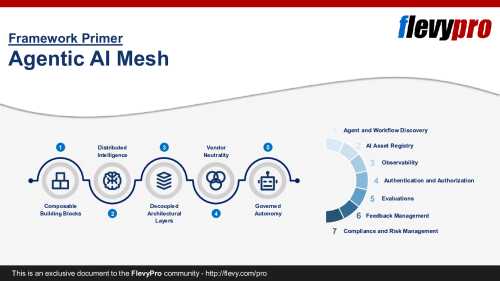

This presentation introduces a comprehensive governance model – the Responsible AI Model (RAM) Framework – designed to address the limitations of traditional governance methods in the era of Agentic AI.

As AI systems evolve into complex, multi-agent ecosystems with higher degrees of

[read more]

28-slide PowerPoint presentation

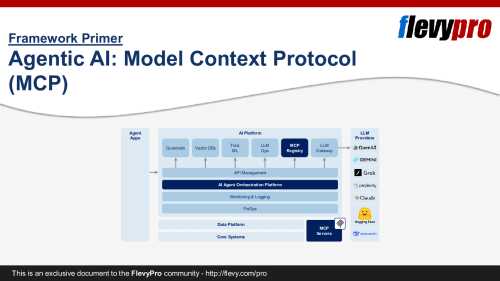

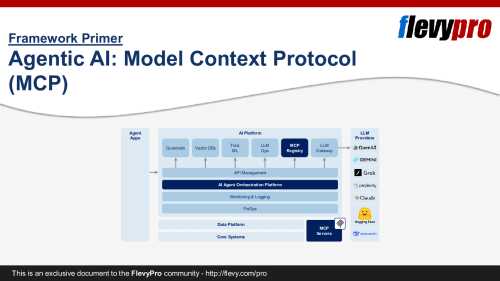

As AI agents spread across organizations, they need access to the tools where work and data already live. Connecting agents to databases, project management platforms, ERP suites, CRM systems, and similar applications remains a major integration hurdle.

This slide deck provides a detailed

[read more]

34-slide PowerPoint presentation

Organizations are moving beyond generative copilots toward agentic AI systems that can plan, act, and adapt across workflows. While this shift promises significant productivity and speed, it also introduces new challenges related to risk, integration, and rapid technological change. Unlocking value

[read more]

Agentic AI is moving beyond simple chat interfaces. These systems can retrieve data, call tools, trigger workflows, and make decisions with limited supervision. That capability opens the door to real efficiency gains. It also creates exposure that feels different from traditional software risk.

Agentic AI is moving beyond simple chat interfaces. These systems can retrieve data, call tools, trigger workflows, and make decisions with limited supervision. That capability opens the door to real efficiency gains. It also creates exposure that feels different from traditional software risk.