Responsible AI stopped being a thought experiment the moment AI systems gained real authority inside organizations. Leaders now wrestle with automation risk, ethical scrutiny, regulatory pressure, and internal trust gaps that can derail even the most promising AI ambitions. The Responsible AI Maturity Model offers a structured framework that turns aspirational ethics into operational discipline. It helps organizations avoid wobbling between overconfidence and anxiety and instead build Responsible AI practices that can stand up to public scrutiny and internal complexity.

Current trends make this more urgent. Generative AI adoption is exploding faster than governance structures can keep up. Boards demand transparency. Regulators tighten expectations. Teams move from pilots to production workloads without stable guardrails. The Responsible AI Maturity Model becomes a crucial template for navigating this turbulence because it gives leaders a shared language for progress, a roadmap for scaling responsibly, and a way to diagnose where their organization is fragile.

Responsible AI requires leadership commitment, structured processes, and a culture that supports fairness, accountability, and transparency. The model describes 3 dimensions that anchor maturity and organizes them into 5 progressive stages of evolution, from Latent to Leading. These stages help organizations understand what Responsible AI looks like in practice, where common pitfalls appear, and how to move from awareness to enterprise-scale execution.

The maturity model defines 5 stages:

- Latent

- Emerging

- Developing

- Realizing

- Leading

The framework is useful because organizations commonly misunderstand the amount of structure required to scale Responsible AI safely. Many assume a few principles or a small task force is enough. The maturity model exposes gaps that are normally invisible until something breaks. It replaces vague aspiration with a language that leaders, governance teams, and developers can use together. It also gives organizations a way to decide how much structure is appropriate for each AI system instead of applying the same degree of overhead everywhere. That flexibility matters because AI practices must adapt to context, not bury teams under bureaucracy.

Another reason this model delivers value is its emphasis on cultural integration. Responsible AI decays quickly when it is treated as an audit function. The model pushes leaders to integrate ethical review into product design, testing, deployment, and ongoing operations. It recognizes that Responsible AI is a living capability that must evolve with new risks, not a one-time compliance win. This makes the maturity model a strategic tool instead of a regulatory burden.

The final piece of utility comes from how the model highlights the role of human judgment. AI governance cannot rely solely on checklists or automated tools. Ethical decisions are messy and contextual. The maturity framework reinforces the need for interpretation, context, and continuous learning so teams avoid treating Responsible AI as static doctrine.

Where Responsible AI Begins to Take Shape

Let’s unpack the first two maturity stages because they often determine whether an organization will ever make meaningful progress.

Latent

The Latent stage is the organizational equivalent of noticing smoke but not grabbing the extinguisher. People sense ethical risks but lack shared language, structure, or urgency. Leadership may mention Responsible AI occasionally, yet the organization behaves as if risk will resolve itself. Teams respond only when something goes wrong, such as an external inquiry or an unexpected operational failure. Funding is nonexistent. Training is anecdotal. Processes are improvisational.

This stage matters because it sets the psychological baseline. When Responsible AI feels optional, teams treat it as intellectual décor rather than operational necessity. Leaders emerging from the Latent stage often realize that informal ethics do not scale. This is where the seeds of accountability start forming and early frameworks begin to appear.

Emerging

The Emerging stage is where Responsible AI moves from backstage whisper to conference room talking point. Leaders start expressing interest, task forces pop up, and draft policies circulate. Yet execution remains spotty because there is no clarity on ownership. Teams may experiment with risk reviews, and awareness training exists, but nothing feels required. Enthusiasm replaces consistency.

This stage often creates false confidence. Leaders assume progress because conversations increase, but gaps remain wide. Teams duplicate work. Ethical reviews occur too late in the development lifecycle. Momentum stalls because informal structures cannot support enterprise demands.

Emerging maturity becomes the pivot point. Organizations that institutionalize accountability move upward. Those that mistake conversation for capability stay stuck.

How Responsible AI Grows into Organizational Muscle

Consider a real-world case that traces the journey from these early stages through to Leading.

A global consumer technology organization rolled out a recommendation engine to personalize customer experiences. Initially, data scientists flagged fairness issues privately, but there was no governance structure to examine or escalate the concerns. This reflected a Latent environment. After a consumer advocacy group criticized the system’s opacity, leadership formed an informal Responsible AI working group. Conversations multiplied. Principles were drafted. Teams shared lessons across Slack channels. But without formal roles or embedded processes, nothing stuck. The organization had entered the Emerging stage.

The turning point arrived when the CEO mandated Responsible AI checkpoints in all product reviews. Governance committees were appointed. Policies gained teeth. Training became standardized. This shift pulled the organization into the Developing stage, where structure started to anchor culture.

By aligning operational processes with Responsible AI expectations, the organization moved into Realizing. Teams began building ethical considerations into design planning instead of waiting for late-stage audits. Performance indicators for fairness and transparency entered leadership scorecards. Over time, the organization began sharing its practices publicly and contributing to external standards, placing it firmly in the Leading stage.

This evolution demonstrates how the maturity model provides clarity in a messy environment. It shows leaders where to focus, what signals indicate progress, and how to maintain momentum.

Frequently Asked Questions

How does an organization know which maturity stage it falls into?

Patterns across governance, culture, processes, and leadership behaviors reveal the stage. If Responsible AI activity is reactive or sporadic, it sits lower. If it is embedded into planning and operations, maturity is higher.
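For teams that want to make this diagnosis repeatable, the idea can be sketched as a small self-assessment script. This is a minimal illustration, not part of the maturity model itself: the 1 to 5 rating scale, the `assess` and `gaps` helper names, and the "weakest area sets the overall stage" rule are all assumptions made for the example. Only the stage names and the four areas (governance, culture, processes, leadership) come from the text above.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The five maturity stages, ordered from lowest to highest."""
    LATENT = 1
    EMERGING = 2
    DEVELOPING = 3
    REALIZING = 4
    LEADING = 5


# The four areas named in the answer above.
AREAS = ("governance", "culture", "processes", "leadership")


def assess(ratings: dict[str, int]) -> Stage:
    """Return an overall stage from per-area ratings on a 1-5 scale.

    Assumption (not from the model): the overall stage is capped by the
    lowest-rated area, so one weak area holds the whole organization back.
    """
    missing = [area for area in AREAS if area not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    # Stage(...) raises ValueError if a rating falls outside 1-5.
    return Stage(min(ratings[area] for area in AREAS))


def gaps(ratings: dict[str, int], target: Stage) -> list[str]:
    """List the areas rated below the target stage, in alphabetical order."""
    return sorted(area for area in AREAS if ratings[area] < target)


if __name__ == "__main__":
    # Hypothetical ratings for an organization with active leadership
    # interest but little formal structure.
    ratings = {"governance": 2, "culture": 3, "processes": 2, "leadership": 4}
    print(assess(ratings).name)              # EMERGING
    print(gaps(ratings, Stage.DEVELOPING))   # ['governance', 'processes']
```

A weakest-link rule is only one possible choice. An average would hide exactly the kind of gap the model is meant to expose, which is why the sketch avoids it, but the ratings themselves still depend on human judgment.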

Does every AI system need the same maturity level?

No. The model emphasizes aligning maturity expectations with system complexity and impact because not all AI carries equal risk.

What is the biggest barrier to moving from Emerging to Developing?

Ownership. Without clear accountability and leadership commitment, early enthusiasm collapses into inconsistency.

Why is human judgment so emphasized?

AI ethics cannot be automated. Fairness and accountability decisions require contextual reasoning, trade-offs, and empathy.

Can maturity regress?

Yes. Leadership turnover, reorganizations, or new technologies can shift priorities and reduce capability maturity.

Closing Reflections

Leaders building Responsible AI capabilities face an uncomfortable truth. AI grows faster than organizational muscle. The Responsible AI Maturity Model gives structure, but organizations must do the uncomfortable work of turning intent into habit. The model doesn’t create discipline; it reveals where discipline must be earned. This requires leaders to resist the temptation to chase speed at the expense of reflection because ethical debt compounds faster than technical debt.

Another overlooked insight is that maturity depends as much on storytelling as governance. Teams adopt Responsible AI practices when they understand the “why,” not just the “what.” Leaders who connect Responsible AI to resilience, trust, and innovation find adoption accelerates. Leaders who treat it like compliance create reluctant participation and eventual decay.

Responsible AI maturity thrives when organizations embrace imperfection. The goal is not flawless performance; it is continuous learning. Teams that treat ethical issues as data points, not failures, become more adaptive, and that adaptiveness becomes the real strength. The model is a mirror. The hard part is being willing to look.


Do You Find Value in This Framework?

You can download in-depth presentations on this and hundreds of similar business frameworks from the FlevyPro Library. FlevyPro is trusted and utilized by 1000s of management consultants and corporate executives.

For even more best practices available on Flevy, have a look at our top 100 lists:

Want to Achieve Excellence in Digital Transformation?

Gain the knowledge and develop the expertise to become an expert in Digital Transformation. Our frameworks are based on the thought leadership of leading consulting firms, academics, and recognized subject matter experts. Click here for full details.

Digital Transformation is being embraced by organizations of all sizes across most industries. In the Digital Age today, technology creates new opportunities and fundamentally transforms businesses in all aspects—operations, business models, strategies. It not only enables the business, but also drives its growth and can be a source of Competitive Advantage.

For many industries, COVID-19 has accelerated the timeline for Digital Transformation Programs by multiple years. Digital Transformation has become a necessity. Now, to survive in the Low Touch Economy—characterized by social distancing and a minimization of in-person activities—organizations must go digital. This includes offering digital solutions for both employees (e.g. Remote Work, Virtual Teams, Enterprise Cloud, etc.) and customers (e.g. E-commerce, Social Media, Mobile Apps, etc.).

Learn about our Digital Transformation Best Practice Frameworks here.

Readers of This Article Are Interested in These Resources

1084-slide PowerPoint presentation

Curated by McKinsey-trained Executives

Unlock the Future of Your Business with the Ultimate AI Strategy Playbook: 1000+ Slides to Master AI and Dominate Your Industry

In today's fast-evolving digital landscape, Artificial Intelligence (AI) is no longer a luxury -- it's a

[read more]

100-slide PowerPoint presentation

Artificial Intelligence has moved from experimentation to everyday operations across industries--customer, supply chain, finance, and tech.

Organizations that adopt AI systematically are widening performance gaps in speed, cost, and experience. In McKinsey's 2025 State of AI, 71% of

[read more]

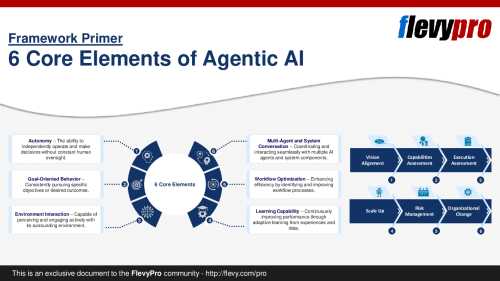

36-slide PowerPoint presentation

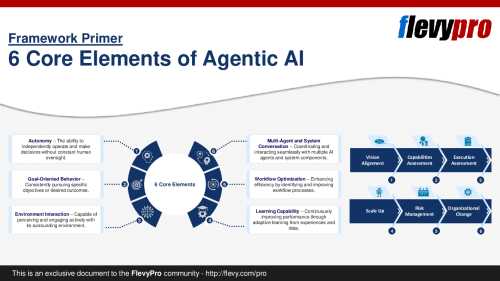

Agentic AI represents a shift toward autonomous, intelligent systems that can make decisions and take actions with minimal human intervention. Evolving from traditional machine learning, this technology enhances operations by automating complex workflows, optimizing decision-making, and enabling

[read more]

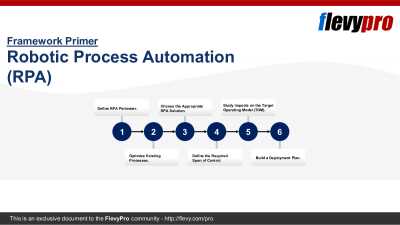

36-slide PowerPoint presentation

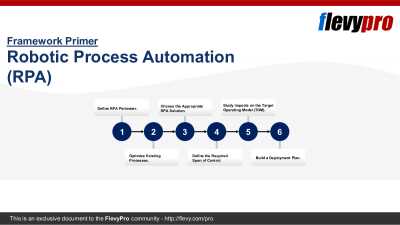

First, what is RPA?

Robotic Process Automation (RPA), also referred to as Robotic Transformation and Robotic Revolution, refers to the emerging form of process automation technology based on software robots and Artificial Intelligence (AI) workers.

In traditional automation, core activities

[read more]